Are we ditching keypads for good in AR/VR?

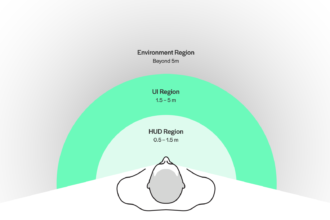

We are moving towards a world where augmented and virtual reality headsets (hereafter referred to as headsets) are gradually making their way into our workplaces and homes [6]. This transition has sparked an evolution of interfaces from bare-minimum elements to full-fledged spatial computing [7]. It is not an easy transition, since we are accustomed to a certain way of interacting with our devices (flat/2D interfaces). One particularly complex case is how to input text with the least amount of friction, so there is a growing need for text input methods that provide a better user experience.

Ergonomics

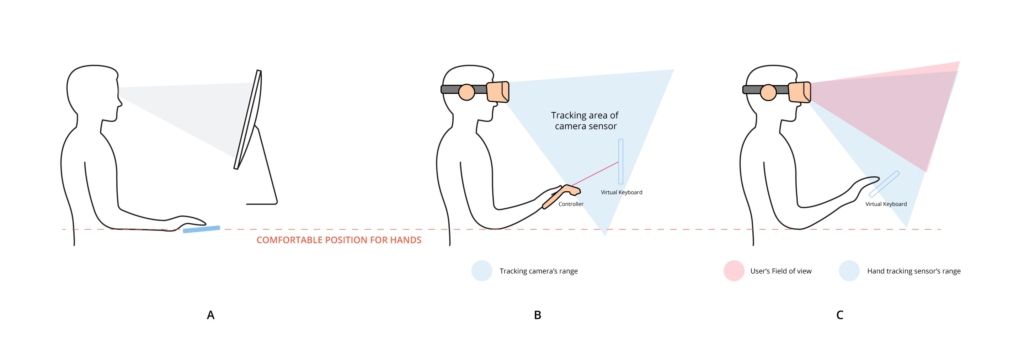

Typing requires close attention to ergonomics, since it is a crucial part of our lives and we spend a significant amount of time using keyboards/keypads. In terms of ergonomics, keyboard placement can make or break the experience.

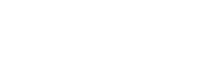

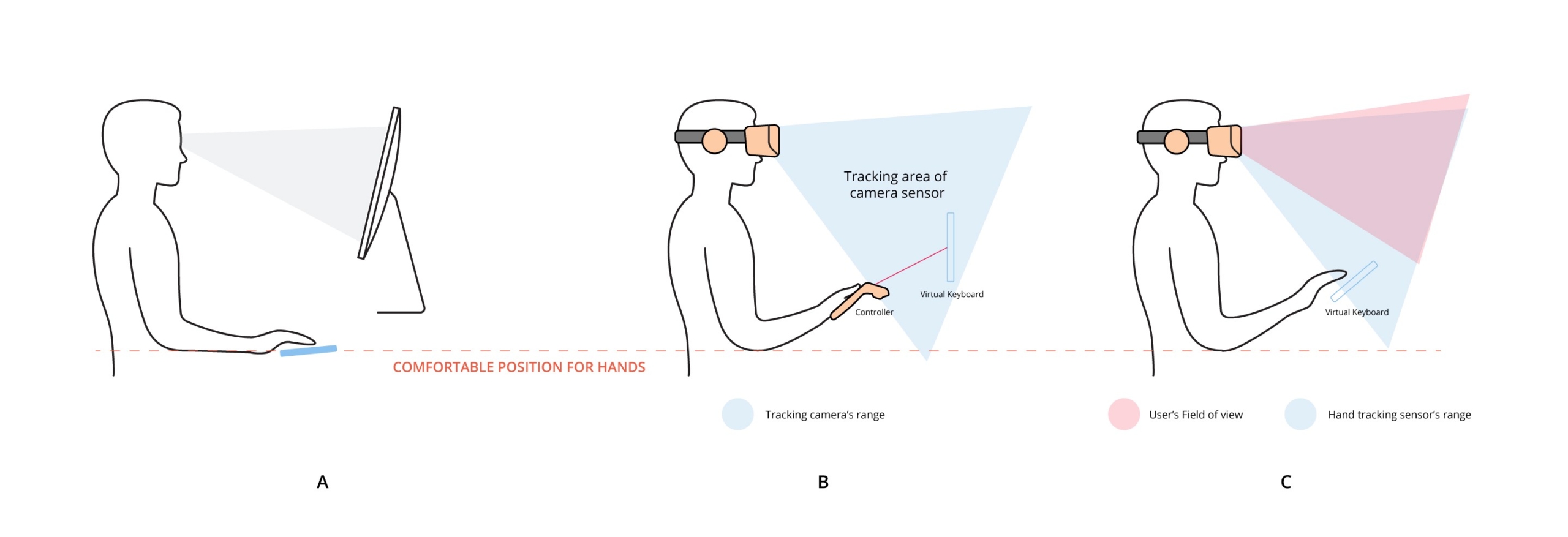

Ideally, the keyboard should be at or below elbow level (Figure 2A), which is not the case for most of the input methods currently available in headsets. The keyboard is either placed in front of the user for controller-based input (Figure 2B) or placed slightly tilted in front of the user for hand tracking-based input (Figure 2C). As a result, fatigue is almost inevitable after prolonged use, since users have to keep moving their hands in mid-air while carrying the weight of the controller. Hand tracking may feel natural, but the limited field of view of the headset and its hand tracking cameras forces the user to place the virtual keypad in front of them (Figure 3), above elbow level, which results in fatigue.

The form factor of the keyboard is another aspect that affects the typing experience. Curved keyboards might feel more ergonomic [3], whereas non-curved ones are expected to be more intuitive because of their familiarity (e.g. touchscreens). Bigger virtual keys require less precise tracking, but as the size increases users have to move their heads around to see all the keys, which might result in motion sickness and fatigue.

These are some known issues with the input methods that are widely used at the moment. As we explore other methods, we will have to keep these ergonomic challenges in mind to come up with the best possible alternatives.

Virtual Keyboard (Controller-based)

Virtual keyboards are the current standard in most VR headsets and applications. You move the pointer, stop at the character to be entered, and tap/click the physical button on the controller to register that character on the screen. It is a time-consuming process and does not feel as intuitive as the input devices we use day to day, such as physical keyboards and smartphone touchscreen keyboards. The closest comparably frustrating experience is using a gaming controller to input text on a PS4/Xbox.

Benefits:

- The layout is similar to the current physical/touchscreen keyboard so the users can start using the method without any friction.

- It is a satisfying experience since you get haptic feedback once you tap/click the button on the controller.

Cons:

- Typing speed is slow, so typing long paragraphs is cumbersome.

- It can be tiring too, because you have to constantly hold the controller, which has its own weight.

- Extra effort is required to keep your hand in a raised position while typing, to make sure it is in the view of tracking cameras on the headset. So your hands are rarely in a resting position or at ease.

Exceptions:

The Google Daydream drum keyboard and Punchkeyboard are exceptions among controller-based input methods. To operate them you just move the pointer over a letter and virtually tap it (move–tap), unlike the three-step process of move, stop over the key, and tap the physical button on the controller.

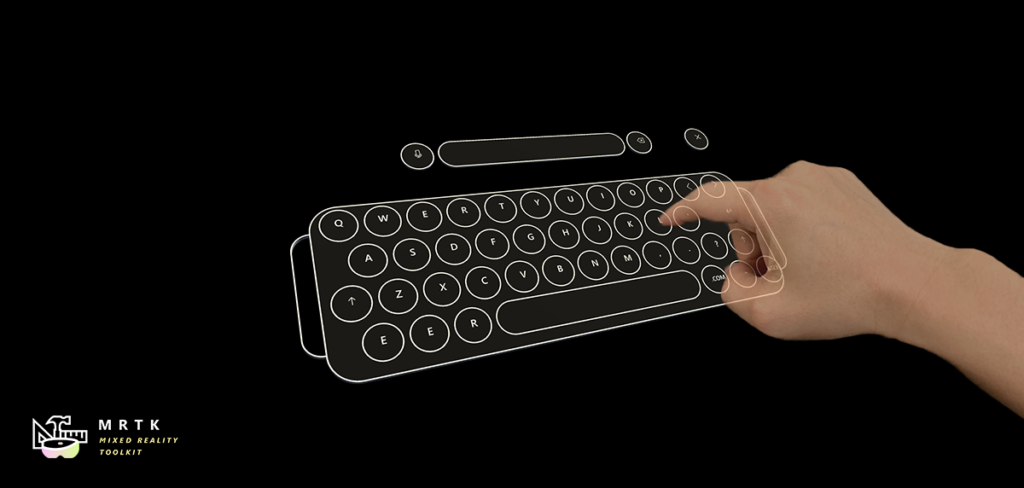

Hand tracking Keyboard

Hand tracking keyboards take input the way we usually type in the real world, except that the physical keyboard is replaced by a virtual one. The clear benefit is typing speed, since fewer steps are needed to input a character: you move your hand and make the tap gesture. With controller-based input, by comparison, you move the pointer over the character, stop for a moment, and tap the physical button on the controller to register the input.

Pros:

- Since the whole process is quite similar to typing in the real world, there is nothing new to learn.

- It is faster than controller-based input because of the ease of hand movement and the reduced number of steps.

Cons:

- This only works if the hand is in the range of cameras mounted on the headset, which brings up the ergonomics issue discussed earlier (Figure 2C).

- Public use of this type of input method is not that feasible unless you are okay with being seen as an orchestra conductor in the middle of the street.

- There is no haptic feedback, so it might initially feel like an alien experience, and getting used to it might take some time. However, haptic feedback can be replaced with sound cues that inform the user that a keystroke has been registered, so that the user can focus on typing instead of keeping track of every character that is being entered.

Voice Input

Voice input has seen a boom in the last few years, with voice assistants (Google Home, Amazon Alexa and Siri) finding homes across the globe and a lot of new hardware shipping with voice commands. However, when it comes to mass-scale adoption I don’t feel we are there yet, because of the accuracy in recognising different accents and the language coverage across different regions. Apart from this, I don’t see myself writing this article by dictating each word. By the time I have dictated one line and got it to register correctly, I would have typed a couple of lines using other methods.

Pros:

- It is highly intuitive and we are familiar with it based on voice assistants on phones and household smart devices.

- It’s a hands-free experience, so the issues discussed in the Ergonomics section won’t hinder the overall experience.

Cons:

- Language and accent barriers might introduce friction in wide-scale adoption across the userbase.

- Privacy can be an issue, since we don’t want to say out loud in public what we are discussing with friends in personal chats. And with surveillance in place in every nook and corner, it’s way too easy to decipher words from lip movements.

Wearables

Wearables are becoming the next big thing with the advent of successful products like the Apple Watch, and AR/VR headsets are another leap into that same family of products.

Gadgets like Ring or Tap require the user to wear an added accessory to input information. They might seem like a feasible option, but that depends on the complexity of the user experience (how they are designed to be operated). Products like Ring, which feel more intuitive, can do wonders if executed well, since they allow a high level of customization based on the user’s needs along with the ability to draw letters in mid-air to input text.

Tap, on the other hand, feels like a whole new way to input text with a huge learning curve, and it doesn’t seem like an accessory that you would want to wear most of the time.

Pros:

- Haptic feedback is very easy to attain.

- Typing speed can be faster than with controllers, since you just type by tapping (in Tap) or by drawing gestures in the air (in Ring).

- Ergonomically these are better, since you don’t have to raise your hands into the view of the hand tracking cameras.

Cons:

- Straps all over your fingers, as in the case of Tap, are not that socially acceptable, nor comfortable enough to be worn at all times.

- There is a steep learning curve if the typing pattern changes. With Tap, you have to learn a lot of finger combinations to type most of the characters (Figure 8). For example, to spell the word “INTO”, you would tap the following sequence:

I = Middle Finger

N = Thumb+Index

T = Index+Middle

O = Ring Finger

Ring recommends a specific way of drawing letters so that the sensors can recognize them correctly, which means it takes time to get accustomed to.

- These are added accessories after all, so it’s up to users to decide whether they want to invest in them mentally and physically, considering that we want to move towards a life with simpler gadgets.
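
To make the chord idea behind Tap a little more concrete, here is a minimal Python sketch of a chord lookup. It is only an illustration: the `CHORDS` table and the `decode` function are hypothetical names, and the table contains just the four letters from the “INTO” example above, not Tap’s full alphabet.

```python
# Hypothetical chord table: only the four letters from the "INTO" example.
# Each key is the set of fingers that tap together to produce one letter.
CHORDS = {
    frozenset({"middle"}): "I",
    frozenset({"thumb", "index"}): "N",
    frozenset({"index", "middle"}): "T",
    frozenset({"ring"}): "O",
}


def decode(taps):
    """Translate a sequence of finger chords into text.

    Chords that are not in the table are rendered as '?'.
    """
    return "".join(CHORDS.get(frozenset(chord), "?") for chord in taps)


word = decode([["middle"], ["thumb", "index"], ["index", "middle"], ["ring"]])
print(word)  # INTO
```

Even in this toy version, every letter is a combination the user has to memorise, which is where the learning curve comes from.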

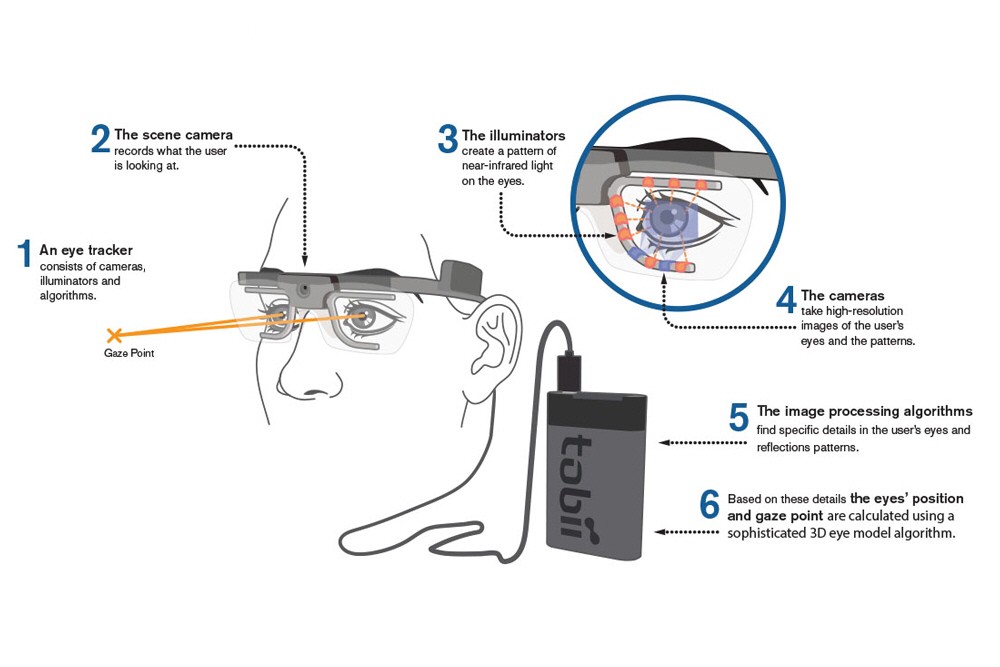

Eye-tracking

Eye-tracking works on the basic principle of tracking your gaze by bouncing infrared light off the eye and capturing the reflection with a camera; the sensor data is then processed to locate the eye and input the information (Figure 10). To type text, the user moves their eyes across the keyboard and then rests their gaze (stare or blink) on the character to be typed. This is a private and hands-free experience, but I’m highly sceptical about its use beyond a few strings of text.
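
The “rest your gaze on a character” step can be sketched as a simple dwell timer. This is a rough Python illustration under my own assumptions, not how any particular headset implements it: the function name `dwell_select`, the `(timestamp, key)` sample format and the 0.8 second threshold are all hypothetical.

```python
def dwell_select(samples, dwell_s=0.8):
    """Return the keys selected from (timestamp_s, key) gaze samples.

    A key is selected once the gaze has rested on it for dwell_s seconds.
    The gaze has to leave the key before the same key can be selected again.
    A key of None means the gaze is not on any key.
    """
    typed = []
    current, since, fired = None, 0.0, False
    for t, key in samples:
        if key != current:
            # Gaze moved to a different key (or off the keyboard): restart the timer.
            current, since, fired = key, t, False
        elif key is not None and not fired and t - since >= dwell_s:
            typed.append(key)
            fired = True
    return typed


gaze = [(0.0, "H"), (0.5, "H"), (0.9, "H"), (1.0, "J"),
        (1.2, "I"), (1.6, "I"), (2.1, "I"), (2.5, "I")]
print(dwell_select(gaze))  # ['H', 'I']
```

Note how the glance at “J” is ignored because it is shorter than the threshold. The threshold is also the reason this method is slow: every character costs at least one full dwell period.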

Pros:

- The technology has reached a stable state and it is now supported by major headsets.

- Data being entered is quite safe and not easily accessible by people nearby.

- It’s a hands-free experience with no added accessories that users have to carry with them all the time.

- Quite intuitive considering the keyboard layout remains the same, just the way we input text shifts to tracking eyes.

Cons:

- The strain on the eyes can become a major issue, considering people already suffer from the vergence-accommodation conflict [8] in current headsets.

- The speed is questionable. Although eye muscles are considered to have the fastest response time, the process of moving your gaze and resting it for a moment on the character to be entered takes quite some time in itself.

- Data collection via eye tracking is a privacy issue beyond just inputting information. Eye tracking can reveal a huge array of information [9] about a person’s identity, sexual preferences and mood, which platforms can use to target users and invade their daily lives. This needs to be addressed before eye tracking can become an accepted method.

Brain-Computer interface

BCI is an emerging technology that has been an active area of research for more than half a century. The idea is simple: create a link between the brain and external devices so that the devices can be controlled directly by the brain without any physical interaction. It’s not sci-fi anymore; retail solutions like MindRDR, MYNDPLAY, and Mindwave are already on the market with different levels of functionality. Work is still required, though, before it is developed enough to be used as an input method for AR/VR headsets.

AlterEgo, a wearable silent-speech input-output device developed by the MIT Media Lab, gives us a peek into how this technology can change the way we interact with our devices in our daily lives. The device is worn around the head, neck, and jawline, and translates the impulses from your brain’s speech centre into words without vocalization (see the video below).

Most of the currently available options are external devices; if headset manufacturers can accommodate the sensors within the headset, it would be an ideal application. Apart from these, a few research prototypes involve implants, an invasive piece of tech that might not be a great option, at least for the coming decade.

Pros:

- Nothing can feel more natural than using our thoughts to operate these devices.

- It’s a hands-free experience so you don’t have to worry about any ergonomics issues.

- Blazing fast input speed, equivalent to the speed of thoughts.

- Highly accurate input of text.

- Ease of use, without the physical strain and extra effort that we have put up with ever since we started typing text with the advent of typewriters.

Cons:

- Can prove to be an invasive technology in case it is hacked.

- If ethical guidelines are not implemented before consumer products are developed, service providers/companies might take undue advantage of the data, similar to how we are targeted with ads on various platforms these days. With BCI they’ll have access to a wide variety of data that can be exploited.

- In the form of brain implants, it might not be a piece of tech that everyone would be willing to adopt.

- The medical effects on our body are still not clear, and it will take time to study them.

- It will take a good amount of time before the consumer-ready version is available with full functionality.

Quick-fix techniques

For users who don’t want to transition to new ways of entering text, hardware manufacturers and researchers are trying to innovate on existing tech.

Logitech HTC Vive Bridge

Logitech has built a keyboard that bridges the physical keyboard with the virtual keyboard by mapping the physical keys into the virtual world. It is like a normal typing experience, with the small difference that you see a virtual keyboard in place of the real one. Hand tracking lets you see the placement of your fingers over the keys, so that there is no mismatch between the keys you are tapping and what you see in the virtual world (Figure 11).

SWiM Keyboard

Researchers from the University of St Andrews have come up with a new tilt-based text entry keyboard called SWiM, short for ‘Shape Writing in Motion’. At first glance it may seem like the gesture-based keyboard Swype, in which you move your finger over keys to input text. The major difference in SWiM is that instead of dragging your thumb or fingers over the screen, you use wrist movement to place the pointer over the characters to be typed (Figure 12). One thing that makes it unique is one-handed operation.
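
One way to picture tilt-based pointing is as a mapping from wrist angles to a cell on the keyboard grid. The Python sketch below is my own simplified illustration and not the mapping used by the SWiM researchers: the function name `tilt_to_pointer`, the 30 degree tilt range and the 10 x 3 key grid are all assumptions.

```python
def tilt_to_pointer(roll_deg, pitch_deg, max_tilt_deg=30.0, width=10, height=3):
    """Map wrist tilt to a (column, row) cell on a width x height key grid.

    roll_deg moves the pointer horizontally and pitch_deg vertically.
    Tilts beyond max_tilt_deg are clamped to the edge of the keyboard.
    """
    def scale(angle, cells):
        # Clamp to [-max_tilt_deg, +max_tilt_deg], then normalise to [0, 1].
        clamped = max(-max_tilt_deg, min(max_tilt_deg, angle))
        unit = (clamped + max_tilt_deg) / (2 * max_tilt_deg)
        # Convert to a cell index, keeping the far edge inside the grid.
        return min(cells - 1, int(unit * cells))

    return scale(roll_deg, width), scale(pitch_deg, height)


print(tilt_to_pointer(0, 0))      # (5, 1)
print(tilt_to_pointer(-30, -30))  # (0, 0)
print(tilt_to_pointer(90, -90))   # (9, 0)
```

A real shape-writing keyboard would then match the whole pointer path against word shapes rather than picking keys one by one, which is beyond this sketch.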

The research [10] claims that first-time users who tested the tilt-based input system were able to achieve a rate of 15 words per minute after minimal practice, and a rate of 32 wpm after around 90 minutes of practice. In the paper, the researchers describe SWiM as “fast and easy to learn compared to many existing single-handed text entry techniques.” They are planning to implement the technique in AR/VR headsets, since these come with built-in tilt sensors (I’m not sure if I want to wobble my head to type text using this tech).

We have discussed multiple methods of entering text in headsets, and it seems that no single method is quite ready for universal application across different scenarios. In my opinion, for now, we’ll continue to use different technologies depending on the scenario: voice input for fast and short commands versus a headset-optimised physical keyboard for longer inputs like articles, books, etc.

And as the market grows, we might see new technologies emerge, including better (more feasible) versions of those currently being explored, such as integrations with wearables/smartwatches (Figure 13).
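
To put those numbers in perspective, here is a small Python sketch of how a words-per-minute figure can be computed. It assumes the common text-entry convention of counting five characters (including spaces) as one word; the article does not say how the paper computed its rates, and the function name `words_per_minute` is my own.

```python
def words_per_minute(transcribed, seconds, chars_per_word=5):
    """Typing rate, counting every chars_per_word characters as one word."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (len(transcribed) / chars_per_word) / (seconds / 60)


# 75 characters in one minute is 15 wpm; 160 characters is 32 wpm.
print(words_per_minute("a" * 75, 60))   # 15.0
print(words_per_minute("a" * 160, 60))  # 32.0
```

Under the same assumption, a 500-word article would take roughly 33 minutes at 15 wpm and a little under 16 minutes at 32 wpm.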

Further Reading

[a] Introduction to Shape Writing, https://www.researchgate.net/publication/285681933_Introduction_to_Shape_Writing

[b] Text Entry in Immersive HMDisplay-based VR using Physical and Touch Keyboards, https://www.microsoft.com/en-us/research/uploads/prod/2018/02/2018_ieee_vr_reposition_cam_ready.pdf

[c] How do we solve typing in AR?, https://www.reddit.com/r/augmentedreality/comments/d4my58/discussion_how_do_we_solve_yping_in_ar/

References

- 2019 Was a Major Inflection Point for VR — Here’s the Proof, https://www.roadtovr.com/2019-major-inflection-point-vr-heres-proof/?fbclid=IwAR3agMYc4OZXwKH9Pe8gtRGMZt246eDpl8ygtcK0JhDDx8JBkvgbZ713adI

- A new era of spatial computing brings fresh challenges — and solutions — to VR, https://www.microsoft.com/en-us/research/blog/a-new-era-of-spatial-computing-brings-fresh-challenges-and-solutions-to-vr/

- Vergence-Accommodation Conflict, https://xinreality.com/wiki/Vergence-Accommodation_Conflict

- The Eyes Are the Prize: Eye-Tracking Technology Is Advertising’s Holy Grail, https://www.vice.com/en_us/article/bj9ygv/the-eyes-are-the-prize-eye-tracking-technology-is-advertisings-holy-grail

- Investigating Tilt-based Gesture Keyboard Entry for Single-Handed Text Entry on Large Devices, https://www.researchgate.net/publication/316709057_Investigating_Tilt-based_Gesture_Keyboard_Entry_for_Single-Handed_Text_Entry_on_Large_Devices
