Variables that affect the experience in augmented reality

Augmented reality headsets are getting better with time, and I was happy to see that the new HoloLens 2 addresses some of the major problems that hinder a seamless experience in AR. Like the previous article, which discussed the current state of text in AR, this article focuses on the major hurdles that affect typography and the overall experience in AR headsets. Overcoming these issues can push us towards a next generation of AR headsets that is more immersive than what we have at the moment.

1. Focus

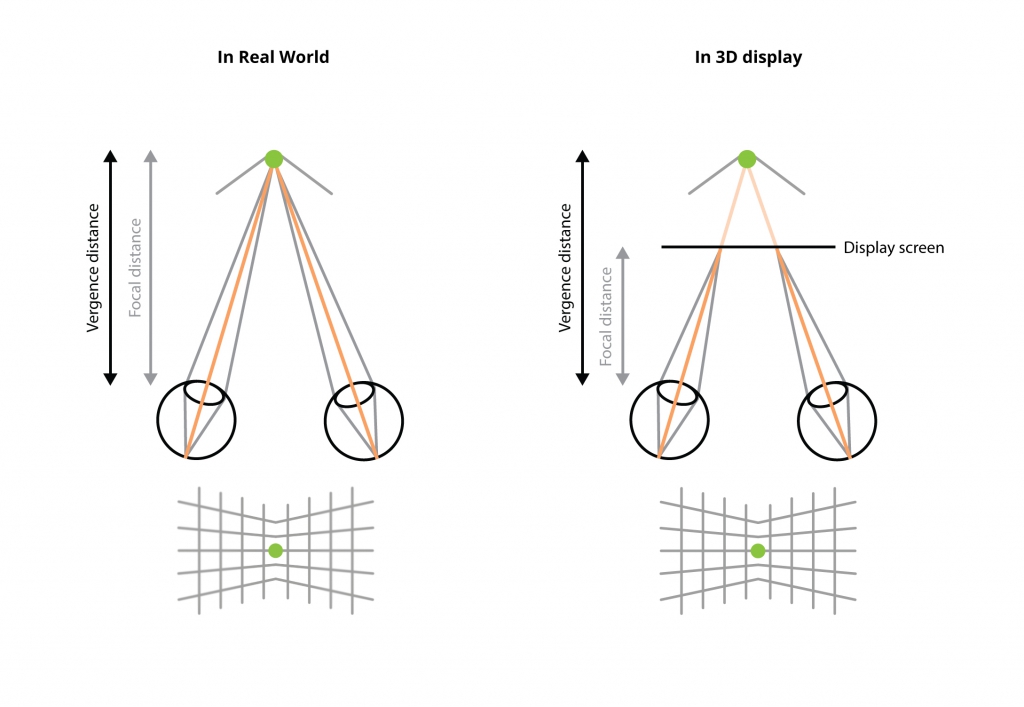

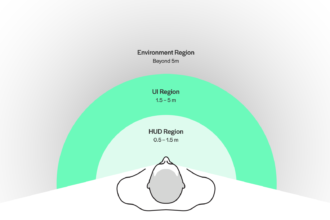

Human eyes have an aperture (the pupil) that limits their depth of field: only objects within a certain range of distances stay in focus, and everything outside that range appears blurred. The eyes can, however, accommodate (focus) at varying distances. Accommodation can happen as a reflex to vergence (the opposite rotation of the two eyeballs to fixate on a point in space), and it can also be controlled consciously. Humans accommodate to a given distance by changing the shape of the elastic lens behind each pupil. In stereoscopic[1] headsets, flat screens only inches away from the eyes are used to simulate depth, so the eyes must focus on the display's image plane while they converge on a point in the simulated world that may be much farther away (see figure 1). This produces conflicting signals about focus and eye alignment, known as the accommodation-vergence conflict, which causes visual fatigue.

Reading text in an AR environment is affected by this conflict. Consider a text label with information about a building: the user must switch focus back and forth between the plane of the building facade and the display's image plane, where the text lies. When looking at the facade, the user sees the details of the building but the text is blurred, and vice versa. Any co-placement of virtual and real objects suffers from this problem unless the objects' depth matches the image plane. The accommodation-vergence conflict is one of the factors that limit prolonged use of these headsets.
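
To put a number on the conflict, the mismatch between the distance the eyes converge on and the distance they must focus at is commonly expressed in diopters (the reciprocal of distance in metres). The sketch below assumes a fixed image plane at 2 m, a building facade at 20 m and a 63 mm interpupillary distance; these are illustrative values, not measurements of any particular headset.

```python
import math

# Assumed interpupillary distance in metres (illustrative; adult values are typically around 60-65 mm).
IPD_M = 0.063


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point at distance_m."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def conflict_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Mismatch between where the eyes converge and where they must focus, in diopters (1/m)."""
    return abs(1 / vergence_distance_m - 1 / focal_distance_m)


# Hypothetical scenario: the headset's image plane is at 2 m, the building facade at 20 m.
image_plane_m = 2.0
facade_m = 20.0

print(f"vergence on facade:      {vergence_angle_deg(facade_m):.2f} deg")
print(f"vergence on image plane: {vergence_angle_deg(image_plane_m):.2f} deg")
# A label placed "on" the facade: eyes converge at 20 m but must focus at 2 m.
print(f"conflict: {conflict_diopters(facade_m, image_plane_m):.2f} D")
```

When the label's depth matches the image plane, the conflict drops to zero, which is the case the paragraph above describes as the exception.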

HoloLens 2 offers responsive focus based on real-time eye tracking: the eye-tracking cameras and sensors identify the object the user is focusing on, and the image plane is adjusted to the user's point of focus.

2. Field of view

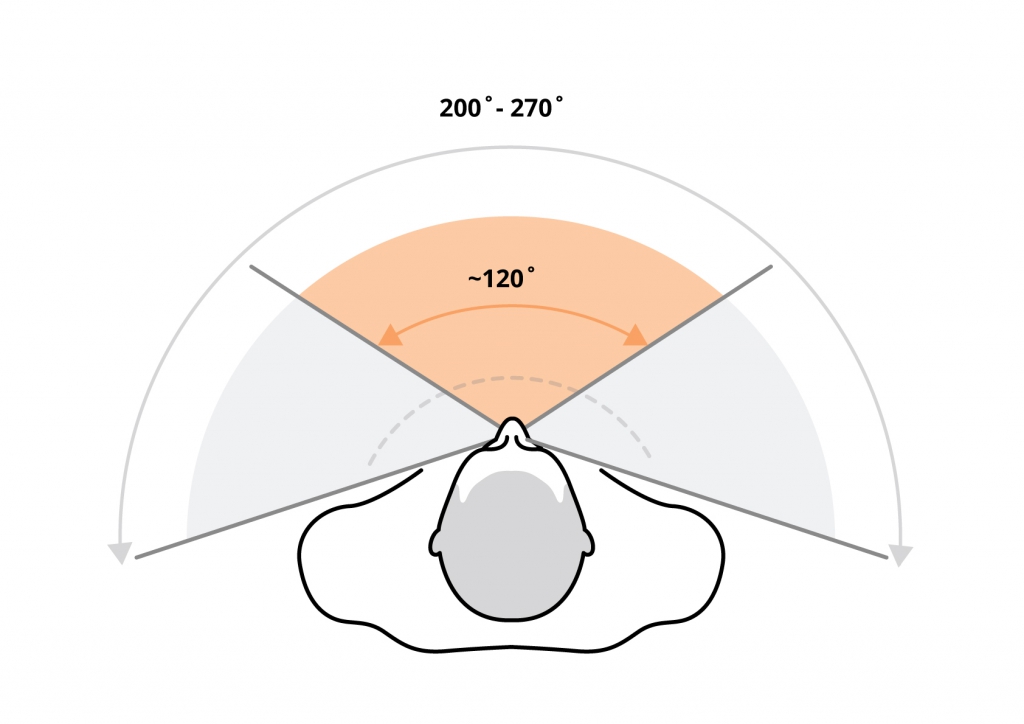

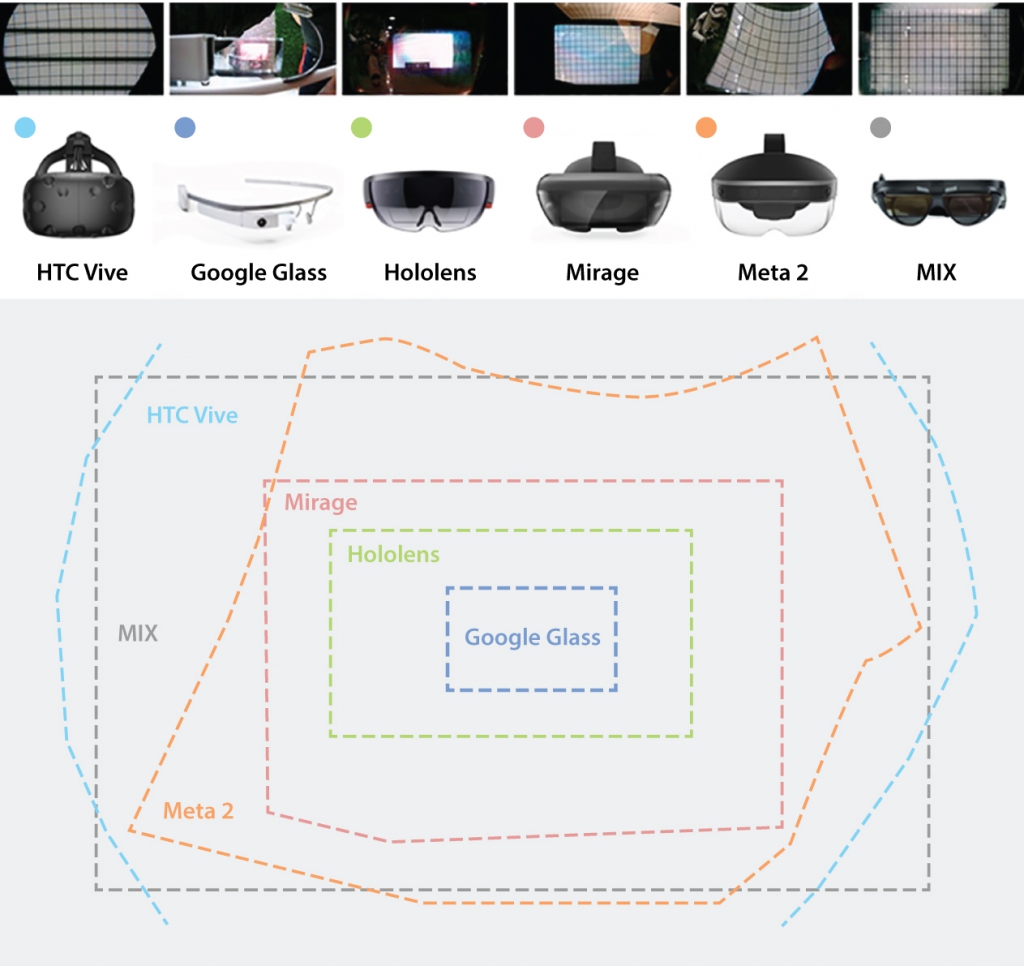

Field of view (FOV) is the extent of the observable world visible at a given moment, measured in degrees. Humans have a field of view of approximately 120 degrees when looking straight ahead, and up to 200 to 270 degrees with eye rotation (see figure 2). In AR, the field of view is divided into the overlay FOV (of the headset) and the peripheral FOV (of the human eye). The headset FOV is where all computer-generated graphics are shown, whereas the peripheral FOV contains the natural, non-augmented part of the observed environment.

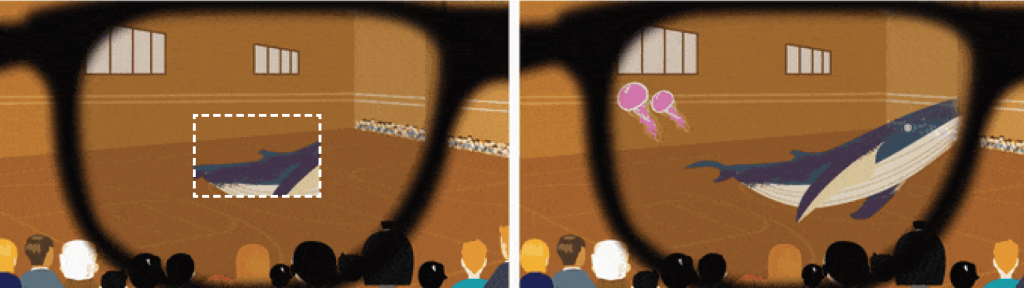

Current headsets have FOVs ranging from 40 to 100 degrees, which is low compared with the human eye; as a result, the user sees cropped images of objects that are close to the eyes (see figure 3). To see the whole object, the user has to step back or move their head around, so a wider FOV is preferred for better immersion. From a typography perspective, a narrow FOV limits the width of the paragraphs that can be used while still providing a good user experience.
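
One way to check whether a paragraph fits is to compare the visual angle of the text panel with the headset's horizontal FOV. The sketch below is a hypothetical helper, assuming a flat panel viewed head-on, a 40-degree horizontal FOV (the low end of the range above) and an arbitrary 80% margin so text does not touch the edges of the overlay.

```python
import math


def angular_width_deg(width_m: float, distance_m: float) -> float:
    """Visual angle subtended by a flat panel of width_m viewed head-on at distance_m."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))


def max_width_m(fov_deg: float, distance_m: float, margin: float = 0.8) -> float:
    """Widest panel that fits inside margin * fov_deg at distance_m.

    margin is a hypothetical safety factor, not a published guideline.
    """
    usable_rad = math.radians(fov_deg * margin)
    return 2 * distance_m * math.tan(usable_rad / 2)


# Assumed example: a 1 m wide text panel placed 1.5 m away on a 40-degree-FOV headset.
distance = 1.5
print(f"1 m panel spans {angular_width_deg(1.0, distance):.1f} deg")
print(f"widest panel that fits at {distance} m: {max_width_m(40, distance):.2f} m")
```

Because the visual angle grows as the panel moves closer, the same paragraph width that fits at a distance can be cropped when the user steps nearer.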

3. Brightness and contrast

It is hard to produce enough brightness for good contrast in see-through displays, especially outdoors, where ambient light is abundant. Since OSTs (optical see-through headsets) let users see the world directly, maximum brightness is required to compete with the real world and make virtual objects blend in or stand out in the scene. Contrast levels directly affect the visibility and legibility of text.
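
The difficulty outdoors follows from how a conventional OST display combines light: the display can only add its own light to what passes through the optics; it cannot darken the background. A simplified additive model shows how the contrast of virtual text collapses as ambient light rises. The luminance and transmittance values below are hypothetical.

```python
def see_through_contrast(display_nits: float, ambient_nits: float,
                         transmittance: float = 1.0) -> float:
    """Contrast ratio of virtual content against the real background in an additive OST display.

    Simplified model: the optics pass transmittance * ambient light, and the display
    adds its own light on top. A text pixel therefore shows (display + background),
    while the area around it shows only the background.
    """
    background = transmittance * ambient_nits
    if background <= 0:
        raise ValueError("model assumes some background light passes through the optics")
    return (display_nits + background) / background


# Hypothetical values: a 500-nit display behind optics that pass 50% of outside light.
print(f"indoors (100-nit wall):     {see_through_contrast(500, 100, 0.5):.1f}:1")
print(f"outdoors (5000-nit facade): {see_through_contrast(500, 5000, 0.5):.1f}:1")
```

Under this model, raising display brightness or lowering transmittance are the only levers, which is why outdoor legibility is so demanding.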

4. Resolution and refresh rate

Display resolution is a crucial factor in the level of detail perceived by the eye. In VST (video see-through) headsets, the view of the real world is limited by the resolution of the camera capturing it; in OST headsets, resolution depends on the type of display. In AR headsets, the most useful resolution metric is pixels per degree (PPD): the number of pixels presented to the eye per degree of visual angle. It is calculated by dividing the number of horizontal pixels per eye by the horizontal field of view provided by the headset's optics (lens)[2]. For example, a 1280 x 800 px display gives 640 x 800 px per eye, and with a 90-degree FOV the PPD is 640/90 ≈ 7.1. This is far below the resolution of the retina: the human fovea resolves approximately 60 PPD.

A lower pixel density can cause blurred text, pixelation and the screen door effect (the visible fine lines between pixels when a display is seen up close). A higher PPD results in sharper, more realistic images. However, a higher pixel density on the display panel does not necessarily mean a higher PPD, because the optics magnify the pixels on the screen. Pixel magnification differs between devices with different optics, resulting in different pixel densities presented to the eye.
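
The PPD arithmetic from the example can be written out directly. This sketch computes an average PPD across the FOV (real optics distribute pixels unevenly, which it ignores) and, for comparison, how many horizontal pixels per eye a 90-degree FOV would need to reach the fovea's approximately 60 PPD.

```python
import math


def pixels_per_degree(horizontal_pixels_per_eye: int, horizontal_fov_deg: float) -> float:
    """Average PPD: horizontal pixels per eye divided by horizontal FOV."""
    return horizontal_pixels_per_eye / horizontal_fov_deg


def pixels_needed(target_ppd: float, horizontal_fov_deg: float) -> int:
    """Horizontal pixels per eye needed to reach target_ppd across the whole FOV."""
    return math.ceil(target_ppd * horizontal_fov_deg)


panel_width = 1280
per_eye = panel_width // 2  # the panel is shared between the two eyes
print(f"{pixels_per_degree(per_eye, 90):.1f} PPD")  # → 7.1 PPD
print(pixels_needed(60, 90))                        # → 5400
```

The gap between 640 and 5400 horizontal pixels per eye shows why text on such a display looks blurred or pixelated.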

A related factor is the refresh rate: the number of times per second the display grabs a new image from the GPU and shows it to the viewer. It influences how the display renders motion and becomes crucial for text that is not stationary in the scene. A higher refresh rate renders moving text more smoothly and legibly.
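
A rough way to see why refresh rate matters for moving text: between two refreshes the text stays still, then jumps to its next position. The smaller the jump, the smoother the motion. The 30 deg/s label speed below is a hypothetical value.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time each image stays on screen before the next refresh."""
    return 1000.0 / refresh_hz


def jump_per_frame_deg(speed_deg_per_s: float, refresh_hz: float) -> float:
    """How far moving text shifts between two consecutive frames."""
    return speed_deg_per_s / refresh_hz


# Hypothetical label sliding across the view at 30 degrees per second.
for hz in (30, 60, 90, 120):
    print(f"{hz:>3} Hz: {frame_time_ms(hz):5.1f} ms/frame, "
          f"text jumps {jump_per_frame_deg(30, hz):.2f} deg per frame")
```

Combined with a low PPD, each of these jumps spans several pixels, which is what makes moving text look stuttery.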

5. Latency

Latency is the delay between an action and the system's reaction. In AR, when a person moves their head, the display should show the resulting changes in the environment immediately. Any significant delay causes a conflict[3] in the brain, which can result in nausea or motion sickness. High latency can also cause virtual elements to become misaligned with the real world.
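
The misalignment can be estimated to a first order: while the image lags behind, the head keeps rotating, so world-locked content is drawn where the head used to point. This sketch assumes a constant head rotation speed; the speed, latency and distance are hypothetical values.

```python
import math


def misregistration_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """First-order angular offset of world-locked content: head speed x latency."""
    return head_speed_deg_per_s * latency_ms / 1000.0


def misregistration_m(angle_deg: float, distance_m: float) -> float:
    """Lateral offset, at distance_m, corresponding to an angular error of angle_deg."""
    return distance_m * math.tan(math.radians(angle_deg))


# Hypothetical: a 100 deg/s head turn with 20 ms of delay, label anchored 2 m away.
err = misregistration_deg(100, 20)
print(f"{err:.1f} deg, about {misregistration_m(err, 2.0) * 100:.0f} cm at 2 m")
```

Even a few centimetres of drift is enough for a label to slide off the object it describes.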

6. Occlusion

Occlusion happens when one object in 3D space blocks another from view; it is one of the essential depth cues in an AR scene. For real objects it happens naturally, but virtual elements require correct occlusion for a better experience (see figure 5). Occlusion is easier to achieve in VST than in OST, where semi-transparent virtual elements are overlaid on the real world.
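
In VST, occlusion can be resolved during compositing, because the camera image and the rendered graphics are merged pixel by pixel. The sketch below assumes a per-pixel depth map of the real scene is available (for example from a depth sensor) and uses a simple depth test. In OST, the same test can hide virtual pixels behind real objects, but virtual content still cannot fully block the real world, because its light is added on top of what passes through.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # RGB


def composite_vst(camera: List[List[Pixel]],
                  real_depth: List[List[float]],
                  virtual: List[List[Optional[Pixel]]],
                  virtual_depth: List[List[float]]) -> List[List[Pixel]]:
    """Per-pixel depth test: show a virtual pixel only where it is nearer than the real surface."""
    out = []
    for y, row in enumerate(camera):
        out_row = []
        for x, real_px in enumerate(row):
            v = virtual[y][x]
            if v is not None and virtual_depth[y][x] < real_depth[y][x]:
                out_row.append(v)        # virtual content is in front
            else:
                out_row.append(real_px)  # real object is nearer, or no virtual content here
        out.append(out_row)
    return out


# Hypothetical 1 x 3 frame: a real pillar at 1 m, a wall at 3 m, a red label at 2 m.
grey, red = (10, 10, 10), (255, 0, 0)
frame = composite_vst(
    camera=[[grey, grey, grey]],
    real_depth=[[1.0, 3.0, 3.0]],
    virtual=[[red, red, None]],
    virtual_depth=[[2.0, 2.0, float("inf")]],
)
print(frame)
```

The first label pixel is hidden by the pillar, the second appears in front of the wall, and the third has no virtual content.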

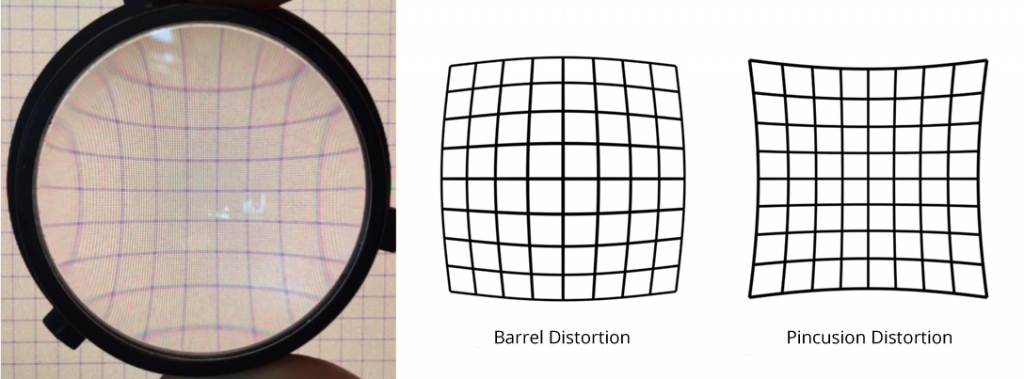

7. Distortions and aberrations

Every AR headset has optical elements (lenses, mirrors) that introduce some level of distortion (see figure 5), such as pincushion and barrel distortion. In addition, the displays suffer from chromatic aberration (colour fringing), which occurs because a lens cannot focus all colours at the same point. The focal length (the distance between the lens and the point where it brings light to a focus) depends on refraction, and since blue and red light have different refractive indices, the lens focuses them at slightly different distances. This chromatic aberration influences the perceived weight and detail of letters. Displays also suffer from irradiation, an excessive glow around letters that sometimes fills small negative spaces in the text. In some cases, the combined effect of chromatic aberration and irradiation results in a poor reading experience.
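
Pincushion and barrel distortion are often described with a polynomial radial model; the sketch below keeps only its first coefficient, k1. Giving each colour channel a slightly different coefficient is one simple way to illustrate lateral colour fringing: a single white point at the edge of a letter lands in three slightly different places. The per-channel coefficients are hypothetical, not measurements of any headset.

```python
from typing import Tuple


def radial_distort(x: float, y: float, k1: float) -> Tuple[float, float]:
    """Single-coefficient radial model: points move along the radius by (1 + k1 * r^2).

    Coordinates are normalised so the optical centre is (0, 0).
    k1 > 0 pushes the edges outward (pincushion); k1 < 0 pulls them inward (barrel).
    """
    scale = 1 + k1 * (x * x + y * y)
    return x * scale, y * scale


# Hypothetical coefficients: each colour channel is bent slightly differently.
K1_PER_CHANNEL = {"red": 0.20, "green": 0.22, "blue": 0.25}
edge_point = (0.8, 0.0)
for channel, k1 in K1_PER_CHANNEL.items():
    x, y = radial_distort(*edge_point, k1)
    print(f"{channel:>5}: ({x:.4f}, {y:.4f})")
```

Points near the optical centre barely move while points near the edge spread apart, which is why fringing and distortion tend to be worst for text placed near the edges of the view.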

In the next article, I’ll share the factors that affect visual perception in AR: a more detailed study of how the variables listed above affect human perception while reading on AR headsets.

Endnotes:

- [1] A stereoscopic display conveys depth perception (3D detail) by exploiting the phenomenon of stereopsis in binocular vision.

- [2] Understanding Pixel Density & Retinal Resolution, and Why It’s Important for AR/VR Headsets, https://www.roadtovr.com/understanding-pixel-density-retinal-resolution-and-why-its-important-for-vr-and-ar-headsets/

- [3] This conflict is caused by discrepancies between the motion perceived on the screen of the head-mounted display (HMD) and the actual motion of the user’s head and body. It results in motion sickness, which develops when what our eyes see does not match how our heads move. Even a few seconds of discrepancy can cause unpleasant sensations, including dizziness, headache, nausea, vomiting and other discomforts.
